Building A Data Lakehouse To Unify Data & Improve Reporting For A Global Retailer

What We Achieved

Reduced Time-To-Insights

Consolidated disparate data sources into a unified analytics platform, eliminating silos and streamlining data access. This enabled faster and more informed decision making.

Standardised Data Pipelines

Established consistent data pipelines to streamline the flow of data, cutting development time and ensuring faster, high-quality delivery of data products.

Developed Reusable Blueprints

Created reusable blueprints to accelerate future projects, promoting consistency and reducing the time and effort needed for new initiatives.

Improved Reporting Capabilities

Consolidated data into a single source of truth, enhancing reporting accuracy and reliability while reducing discrepancies and report generation time.

The Challenge

TL Consulting were engaged by a Global Retailer to design, build, and operationalise a Modern Data Analytics platform using Data Lakehouse technology with Databricks on the Azure platform.

A key vision for the platform was to integrate open-source tools and platforms that support data quality and security, while enriching all data sources under robust data governance & metadata management.

At the time, the customer faced several business challenges & pain points, summarised below:

Lack of Data Governance – No governance across the organisation and no defined metrics.

Slow Time-To-Insight – Multiple data sources & systems, combined with complex data ingestion processes, led to inefficient and poorly informed decision making.

No Single Source of Truth – Multiple datasets representing key business functions showed reporting discrepancies & poor data quality, with no standardisation.

High Complexity – Several data sources, each with its own complexity & heterogeneous data structures, were not ingested & enriched in a uniform way.

The data platform needed to support datasets ingested through a range of ingestion patterns from multiple source systems, including material master data, distribution centre data, customer payment transactions and financial data. It also needed to let data science teams bring their own data (BYOD) for self-service analytics.

The Solution

The project was co-funded using the Microsoft Azure Innovate offering, allowing our customer to receive a subsidy that reduced the financial burden of the project.

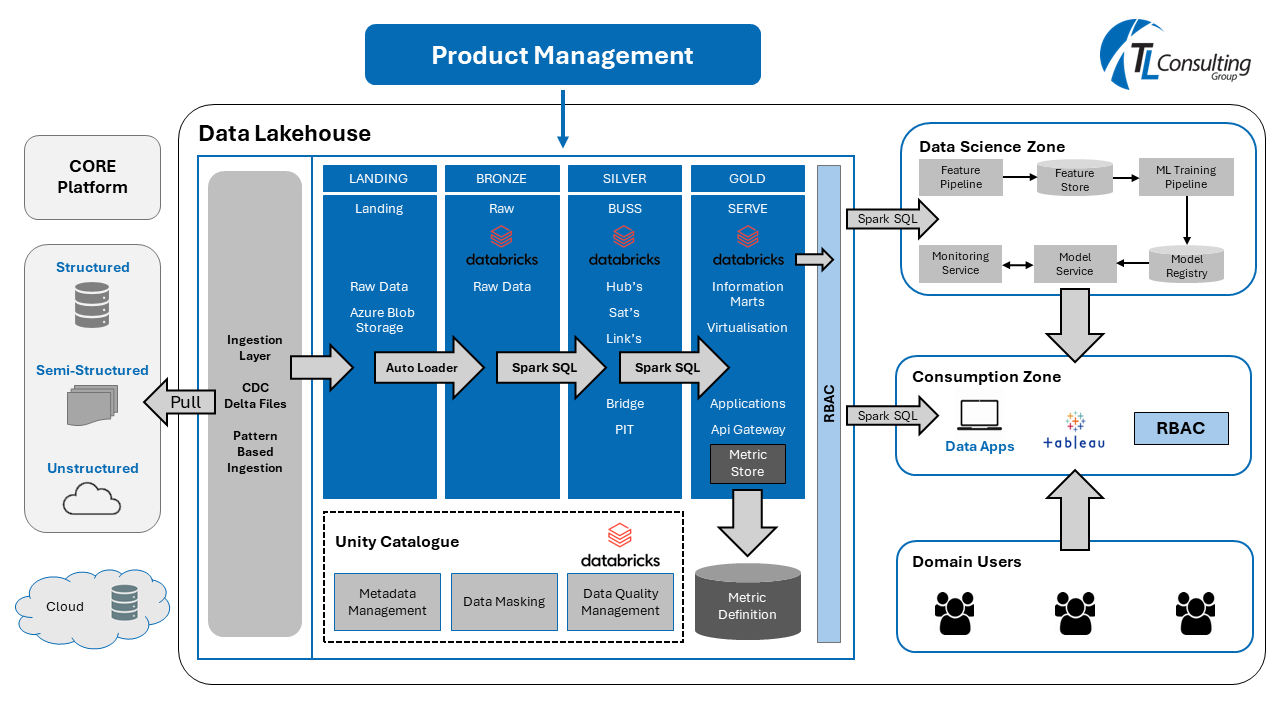

The solution delivered was a metadata-driven lakehouse built from the ground up using an ELT design methodology. A key capability of the platform was the integration of open-source tools and platforms for data quality and test automation. TL Consulting delivered this engagement following a top-down strategic approach, with the solution underpinned by industry best practices and architecture principles. By building a unified and future-ready lakehouse, the customer could accelerate innovation, gain deeper insights from their data, and enable AI/ML capabilities and use cases.
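As a rough illustration of what "metadata-driven" can mean in practice, the sketch below keeps the description of each source in a small metadata record and lets a generic runner pick the ingestion routine from it. Everything in it is hypothetical: the `SourceConfig` fields, the pattern names, the locations and the stub loaders are assumptions for illustration, not the framework delivered on this engagement, and a real implementation would read data with Spark on Databricks rather than return in-memory rows.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Row = Dict[str, object]


@dataclass(frozen=True)
class SourceConfig:
    """Hypothetical metadata record describing one source to ingest."""
    name: str          # logical dataset name
    pattern: str       # ingestion pattern: "file", "database" or "rest_api"
    location: str      # path, table or endpoint (illustrative values only)
    target_table: str  # landing table for the raw data


# Stub loaders standing in for real readers; each returns one sample row.
def load_file(cfg: SourceConfig) -> List[Row]:
    return [{"source": cfg.name, "via": "file", "location": cfg.location}]


def load_database(cfg: SourceConfig) -> List[Row]:
    return [{"source": cfg.name, "via": "database", "location": cfg.location}]


def load_rest_api(cfg: SourceConfig) -> List[Row]:
    return [{"source": cfg.name, "via": "rest_api", "location": cfg.location}]


# Registry mapping a pattern name in the metadata to the routine that handles it.
LOADERS: Dict[str, Callable[[SourceConfig], List[Row]]] = {
    "file": load_file,
    "database": load_database,
    "rest_api": load_rest_api,
}


def run_ingestion(configs: List[SourceConfig]) -> Dict[str, List[Row]]:
    """Ingest every configured source and return rows keyed by target table."""
    landed: Dict[str, List[Row]] = {}
    for cfg in configs:
        if cfg.pattern not in LOADERS:
            raise ValueError(f"Unknown ingestion pattern: {cfg.pattern!r}")
        landed.setdefault(cfg.target_table, []).extend(LOADERS[cfg.pattern](cfg))
    return landed


# Example metadata; dataset names echo the case study, all values are made up.
CONFIGS = [
    SourceConfig("material_master", "database", "erp.materials", "bronze.material_master"),
    SourceConfig("distribution_centre", "file", "/landing/dc/", "bronze.distribution_centre"),
    SourceConfig("payments", "rest_api", "https://example.com/payments", "bronze.payments"),
]

if __name__ == "__main__":
    for table, rows in run_ingestion(CONFIGS).items():
        print(table, len(rows))
```

With this shape, onboarding a new source means adding a metadata record rather than writing a new pipeline, which is the main appeal of the approach.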

The Outcomes

- Data Platform Solution Design & Build – Aligned with the business goals & objectives, with a clear definition of each technology component and architectural design pattern.

- Delivered Re-Usable Data Ingestion Patterns – Including a medallion architecture to ingest, transform and enrich historical and delta loads (supporting files, databases and REST APIs). This supports pattern reusability and scalability for future data sources.

- Configured Delta Load Management – Using Auto Loader within Databricks as a control framework to handle delta ingestion loads.

- Unified Metadata Management – Encompassing Automated Data Quality & Test Automation workflows integrated with Unity Catalog.

- Implemented Data Vault Modelling – To build a data warehouse for enterprise scale analytics.

- Designed the CI/CD workflow – Using Azure DevOps to automate code integration, testing & deployment to ensure rapid and reliable delivery.

- Implemented Microservice-Orientated Design Patterns – Enabling a cloud-native, modular architecture in which each service handles a specific function, enhancing agility and resilience and allowing services to run autonomously and evolve independently when necessary.
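The medallion and delta-load outcomes above can be pictured with a small, framework-free sketch: a bronze step that lands only records newer than a stored watermark, a silver step that cleans and de-duplicates, and a gold step that aggregates for reporting. This is plain Python for illustration only. It does not use Databricks Auto Loader or Delta Lake APIs, and the field names (`order_id`, `store`, `amount`, `updated_at`) and the watermark approach are assumptions rather than the delivered design.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Row = Dict[str, object]


def to_bronze(incoming: List[Row], watermark: int) -> Tuple[List[Row], int]:
    """Land only rows changed since the last run (a simple delta load)."""
    new_rows = [r for r in incoming if r["updated_at"] > watermark]
    new_watermark = max((r["updated_at"] for r in new_rows), default=watermark)
    return new_rows, new_watermark


def to_silver(bronze: List[Row]) -> List[Row]:
    """Drop rows with no amount, standardise store codes, keep the latest row per order."""
    latest: Dict[object, Row] = {}
    for row in sorted(bronze, key=lambda r: r["updated_at"]):
        if row.get("amount") is None:
            continue  # basic data quality rule: amount is mandatory
        cleaned = {**row, "store": str(row["store"]).strip().upper()}
        latest[row["order_id"]] = cleaned  # later versions overwrite earlier ones
    return list(latest.values())


def to_gold(silver: List[Row]) -> Dict[str, float]:
    """Aggregate cleaned rows into a reporting-friendly total per store."""
    totals: Dict[str, float] = defaultdict(float)
    for row in silver:
        totals[row["store"]] += float(row["amount"])
    return dict(totals)


# Made-up sample batch: one duplicate order, one row failing the quality rule.
SAMPLE = [
    {"order_id": 1, "store": " syd ", "amount": 10.0, "updated_at": 1},
    {"order_id": 2, "store": "MEL", "amount": None, "updated_at": 2},
    {"order_id": 1, "store": "SYD", "amount": 12.5, "updated_at": 3},
    {"order_id": 3, "store": "mel", "amount": 7.5, "updated_at": 4},
]

if __name__ == "__main__":
    bronze, watermark = to_bronze(SAMPLE, watermark=0)
    gold = to_gold(to_silver(bronze))
    print(gold, watermark)
```

Passing the returned watermark back into `to_bronze` with the same batch lands nothing new, which is the behaviour a delta load is meant to provide.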

Ready to enhance decision-making and operational efficiency with advanced data solutions? Contact us to see how we can build, transform and enrich your data using Microsoft Azure and Databricks, just as we did for this global retailer.

Accelerate Cloud Adoption with Financial Accelerators

As specialised Microsoft Solutions Partners, we have access to the Azure Migrate and Modernize & Azure Innovate offerings, enabling us to secure subsidised incentive funding for Proofs of Value/PoCs, MVPs, and migrations to further enable your business. This funding catalyst, combined with our expertise, accelerates your delivery model with TL Consulting at its core.
